Devdroplets ♥ Traefik

Have you ever wondered, "How many servers does it take to deploy a lightbulb?" Of course not; that would be quite strange. We have, though, if the lightbulb were a web service being deployed to the World Wide Web. More precisely: how do we provide all of our services, as we do now, through a single address instead of a hundred? The short answer: reverse proxying with a single point of entry. The somewhat longer answer: Traefik and some heavy, time-consuming configuration.

Reverse proxying, the simple answer

If you have ever spent time in the web-hosting community, you have probably picked up the magic words "reverse proxy". If not, you might have heard of a plain proxy: the annoying thing some applications ask you about before finishing their installation. Before you conclude they are the same thing: they are. But also not, because this one is reversed. A regular proxy acts on behalf of the client, while a reverse proxy sits in front of the servers. Wikipedia says the following on the subject:

In computer networks, a reverse proxy is a type of proxy server that retrieves resources on behalf of a client from one or more servers. These resources are then returned to the client, appearing as if they originated from the proxy server itself.

- Wikipedia, https://en.wikipedia.org/wiki/Reverse_proxy

And I couldn't have explained it better. Put simply: you ask the reverse proxy for the website you want (for example example.com, very creative), and the proxy asks the actual web server inside its own network. It makes essentially the same request you did and, once the response comes back, returns it to you as if it were its own content.
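To make that concrete, here is a minimal sketch of a host-based reverse proxy using only Python's standard library. It is not how Traefik is implemented. It only shows the idea: read the `Host` header, look up a backend in a routing table, repeat the request there, and hand the answer back. The `ROUTES` table, the `HelloBackend` server and the `demo()` helper are hypothetical. The sketch only handles GET requests.

```python
import http.client
import http.server
import threading

# Hypothetical routing table: FQDN -> (backend host, backend port).
# In a container setup these would be internal addresses on the Docker network.
ROUTES: dict[str, tuple[str, int]] = {}


class ReverseProxyHandler(http.server.BaseHTTPRequestHandler):
    """Forwards GET requests to the backend selected by the Host header."""

    def do_GET(self) -> None:
        host = (self.headers.get("Host") or "").split(":")[0]
        backend = ROUTES.get(host)
        if backend is None:
            self.send_error(404, "Unknown host")
            return
        # Make (essentially) the same request the client made, inside "our" network.
        conn = http.client.HTTPConnection(*backend, timeout=5)
        try:
            conn.request("GET", self.path, headers={
                "Host": host,
                "X-Forwarded-For": self.client_address[0],
            })
            upstream = conn.getresponse()
            body = upstream.read()
        finally:
            conn.close()
        # Return the backend's answer as if it were the proxy's own content.
        self.send_response(upstream.status)
        content_type = upstream.getheader("Content-Type")
        if content_type:
            self.send_header("Content-Type", content_type)
        self.send_header("Content-Length", str(len(body)))
        self.end_headers()
        self.wfile.write(body)

    def log_message(self, format, *args) -> None:
        pass  # silence per-request logging for the example


class HelloBackend(http.server.BaseHTTPRequestHandler):
    """A stand-in for the 'actual web server' behind the proxy."""

    def do_GET(self) -> None:
        body = b"hello from the backend"
        self.send_response(200)
        self.send_header("Content-Type", "text/plain")
        self.send_header("Content-Length", str(len(body)))
        self.end_headers()
        self.wfile.write(body)

    def log_message(self, format, *args) -> None:
        pass


def serve_in_background(handler) -> http.server.ThreadingHTTPServer:
    """Start a server on a free localhost port in a daemon thread."""
    server = http.server.ThreadingHTTPServer(("127.0.0.1", 0), handler)
    threading.Thread(target=server.serve_forever, daemon=True).start()
    return server


def demo() -> tuple[int, bytes]:
    """Start a backend and the proxy, then request example.com through the proxy."""
    backend = serve_in_background(HelloBackend)
    proxy = serve_in_background(ReverseProxyHandler)
    ROUTES["example.com"] = backend.server_address[:2]
    try:
        # The client only talks to the proxy; the Host header picks the site.
        conn = http.client.HTTPConnection(*proxy.server_address[:2], timeout=5)
        conn.request("GET", "/", headers={"Host": "example.com"})
        response = conn.getresponse()
        result = (response.status, response.read())
        conn.close()
    finally:
        for server in (backend, proxy):
            server.shutdown()
            server.server_close()
    return result


if __name__ == "__main__":
    print(demo())
```

Running the file prints `(200, b'hello from the backend')`. The body came from the backend, even though the client only ever talked to the proxy.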

Traefik, the not-so-simple answer

Traefik is an open-source reverse proxy and load balancer for both HTTP and TCP traffic. Its automatic service discovery makes deploying dynamic applications and microservices easy. It works fine with static deployments, such as plain servers, but its extra trick is discovering services on its own. It can detect services deployed through, for example, Kubernetes, Mesos and, in our use case, Docker. It also manages certificates for these web services, supporting both wildcard certificates and custom domain certificates.

Traefik was created by Containous, a company that provides cloud tooling and consulting. Traefik is used by, for example, Mozilla, Rocket.Chat and New Relic.

Our environment

We use Docker containers to deploy edge services. Inside these containers, web servers serve our products on a specific port or, mostly for third-party software, on the default port 80. We assume that every deployed product lives under a single domain name with its own unique subdomain. Each product is therefore reachable via its unique FQDN, or written out fully, its fully qualified domain name: a fancy way of saying the full hostname of the website (for example git.devdroplets.com). Having many containers, each with its own FQDN, makes running a statically configured reverse proxy quite tedious. It also makes expanding a wearisome task, as we have more servers ready to be deployed whenever needed.

Other considerations

Before settling on Traefik, we considered other solutions. Some made it into production, whilst others only ever existed on paper:

Different KVM servers

Before we started using Docker, when we only had a couple of services, I considered using KVM virtualization to run several servers on one physical machine. This would have required installing a KVM hypervisor on the server itself. I considered it because I had a lot of experience with KVM virtualization and VMware's virtual servers.

I never actually did this. Reinstalling the server was a big task I had no appetite for, and the value proposition was not that great. Running KVM virtual machines is compute- and memory-heavy, and the server we used at the time didn't have much of either. For only a couple of small services that would have been manageable. But even after upgrading (which we did a few weeks later), it would have been a hassle to maintain. So I moved on to another solution.

Reverse proxying with NGINX

After setting up a single headless Ubuntu Server machine, we started using NGINX to handle reverse proxying for the whole infrastructure. This worked quite well, as we only had three instances running at the time. Configuring them was as easy as writing three server blocks in the main configuration file.

However, as the number of services grew, this single configuration file became huge and hard to manage. I temporarily fixed that by splitting it into separate files in the `conf.d` directory. That did not make deployment any easier, however. I still had to configure almost everything by hand for every new service, which made deploying quite annoying and tedious.

Yxorp-dd

As an alternative to all of these solutions, I built a small PoC of my own reverse proxy service. It ran as a container on the same Docker server and managed the NGINX configuration. The project is called yxorp and can be found in the repository explorer on git.devdroplets.com.
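The core idea behind a tool like this can be sketched in a few lines. You keep a list of services and render one NGINX `server` block per subdomain into the configuration. The sketch below is hypothetical and is not the actual yxorp code. The `Service` fields, the container names (`gitea`, `website`) and the block template are illustrative assumptions. A real tool would also write the result into `conf.d` and run `nginx -s reload`.

```python
from dataclasses import dataclass

# Template for one NGINX server block. Doubled braces are literal braces
# for str.format; $host and $remote_addr are NGINX variables.
SERVER_BLOCK = """server {{
    listen 80;
    server_name {fqdn};

    location / {{
        proxy_pass http://{upstream}:{port};
        proxy_set_header Host $host;
        proxy_set_header X-Real-IP $remote_addr;
    }}
}}
"""


@dataclass(frozen=True)
class Service:
    subdomain: str  # e.g. "git" -> git.devdroplets.com
    upstream: str   # hypothetical container name on the Docker network
    port: int = 80  # most third-party images listen on port 80


def render_nginx_config(services: list[Service], domain: str) -> str:
    """Render one server block per service, sorted so the output is stable."""
    blocks = [
        SERVER_BLOCK.format(
            fqdn=f"{service.subdomain}.{domain}",
            upstream=service.upstream,
            port=service.port,
        )
        for service in sorted(services, key=lambda s: s.subdomain)
    ]
    return "\n".join(blocks)


if __name__ == "__main__":
    print(render_nginx_config(
        [Service("git", "gitea", 3000), Service("www", "website")],
        "devdroplets.com",
    ))
```

Generating the file solves the copy-paste problem. It still leaves the list of services to maintain by hand, which is exactly the part Traefik's discovery takes over.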

After using it for a couple of weeks, I concluded that this PoC was just that: a proof of concept, and nothing that should be used in production. The code wasn't clean and the usability was nowhere near what I needed. I stopped using and maintaining the yxorp project and started looking for alternatives. It was then that I stumbled upon Traefik, which I still use today.

Traefik automation

To automate deployment, Traefik constantly watches the Docker socket for newly deployed containers. Every five seconds, it checks for a new container, and when it finds one, it reads that container's labels. Two labels are required to expose a Docker container to the internet through Traefik.

For Traefik to accept the container, the label “label.subdomain” must be set to a unique string. This label defines the subdomain of the service. When it is present on a Docker container, Traefik automatically exposes that container on the default entry point: HTTP.

The second label makes sure the HTTPS entry point is used instead of the default HTTP one. It enables TLS on the container's router. The label is "traefik.http.routers.[subdomain].tls" and must be set to "true". Traefik then attaches that container's router to the HTTPS entry point and automatically secures it with the certificate.

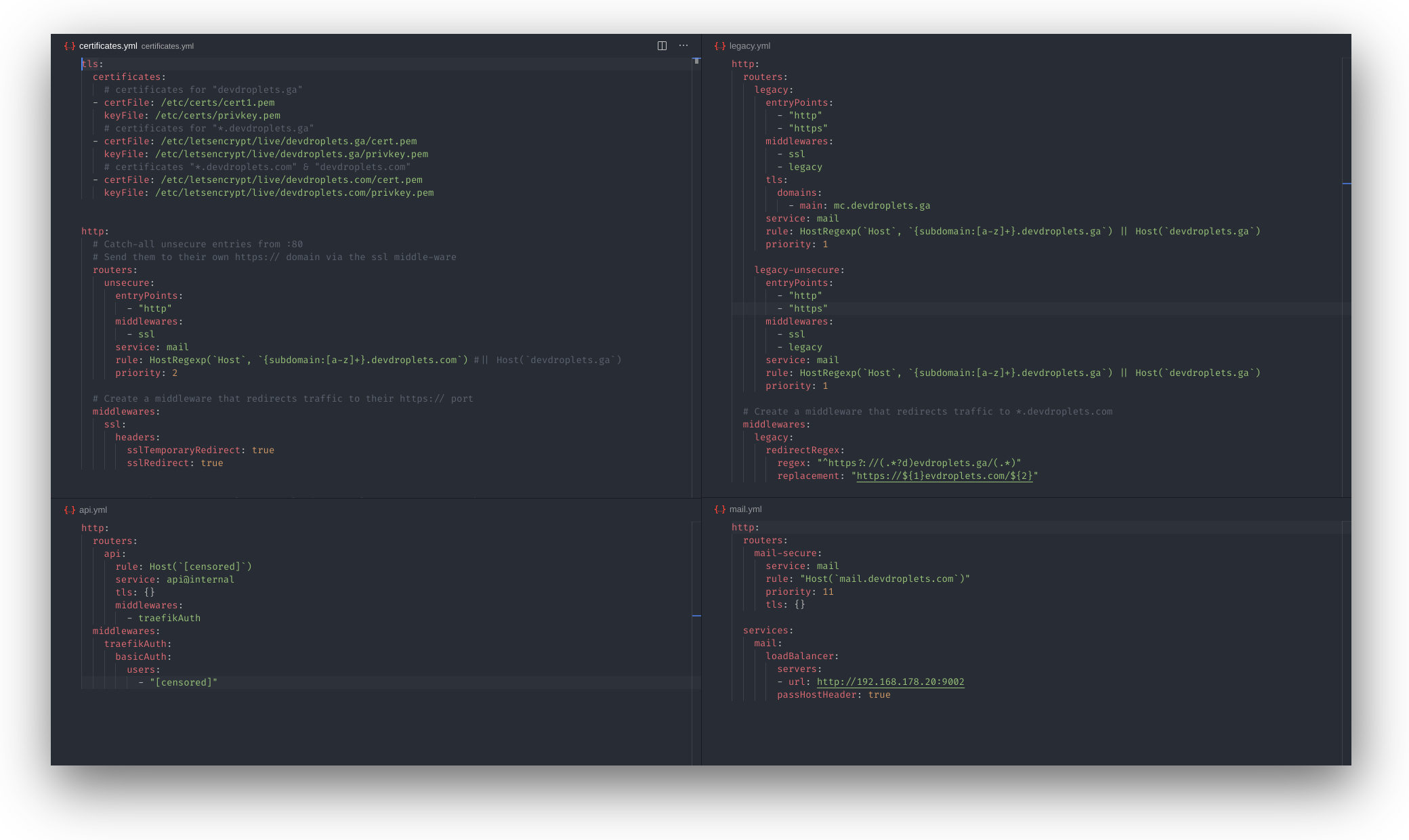
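The discovery behaviour described above can be modelled in a few lines of Python. This is a simplified model, not Traefik's implementation; Traefik does this internally through its Docker provider. The domain, the entry point names `http` and `https`, the `Route` type and the `list_containers`/`apply` callbacks are all assumptions. With the Docker SDK for Python, `list_containers` could return `{c.name: c.labels for c in docker.from_env().containers.list()}`.

```python
import time
from dataclasses import dataclass
from typing import Callable, Mapping, Optional

DOMAIN = "devdroplets.com"           # assumption: the single shared domain
SUBDOMAIN_LABEL = "label.subdomain"  # label name used in this article

Labels = Mapping[str, str]


@dataclass(frozen=True)
class Route:
    subdomain: str
    rule: str
    entry_point: str
    tls: bool


def routes_from_labels(containers: Mapping[str, Labels]) -> dict[str, Route]:
    """Map container name -> route for every container with a subdomain label."""
    routes = {}
    for name, labels in containers.items():
        subdomain = labels.get(SUBDOMAIN_LABEL)
        if not subdomain:
            continue  # no subdomain label: the container is not exposed
        tls_label = f"traefik.http.routers.{subdomain}.tls"
        tls = labels.get(tls_label, "").lower() == "true"
        routes[name] = Route(
            subdomain=subdomain,
            rule=f"Host(`{subdomain}.{DOMAIN}`)",
            # Without the TLS label the router stays on the default HTTP entry point.
            entry_point="https" if tls else "http",
            tls=tls,
        )
    return routes


def watch(
    list_containers: Callable[[], Mapping[str, Labels]],
    apply: Callable[[dict[str, Route]], None],
    interval: float = 5.0,
    rounds: Optional[int] = None,
) -> None:
    """Poll every `interval` seconds and re-apply routes only when they change.

    `rounds` limits the number of polls (None = forever), which keeps it testable.
    """
    known: Optional[dict[str, Route]] = None
    done = 0
    while rounds is None or done < rounds:
        current = routes_from_labels(list_containers())
        if current != known:
            apply(current)
            known = current
        done += 1
        time.sleep(interval)
```

Keeping `routes_from_labels` free of I/O makes the label logic easy to check on its own. The loop only decides when a changed set of routes needs to be applied.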

Static configurations

Almost every web server configuration is generated automatically from the Docker containers, thanks to the automated process set up in Traefik beforehand. However, some services need a dedicated configuration file of their own. As of now, our Traefik configuration directory contains four such configurations:

API. This file creates the route for external access to the Traefik dashboard. It lets me view the dashboard from anywhere, protected by basic authentication.

Certificates. This file manages the certificate files for secure HTTPS connections. It tells Traefik where to find the certificates. It also instructs Traefik to redirect all traffic arriving at the non-secure entry point to its secure counterpart.

Legacy. This configuration handles the temporary TLD migration from .ga to .com. It redirects traffic that arrives on a .ga domain to the same domain with a .com TLD. In the near future, it will be replaced by a dedicated web server container that tells users about the migration itself.

Mail. The last configuration is for the mail service provided to Devdroplets applicants. For technical reasons, it cannot be deployed automatically as a single Docker container, which is why it has its own dedicated configuration file.

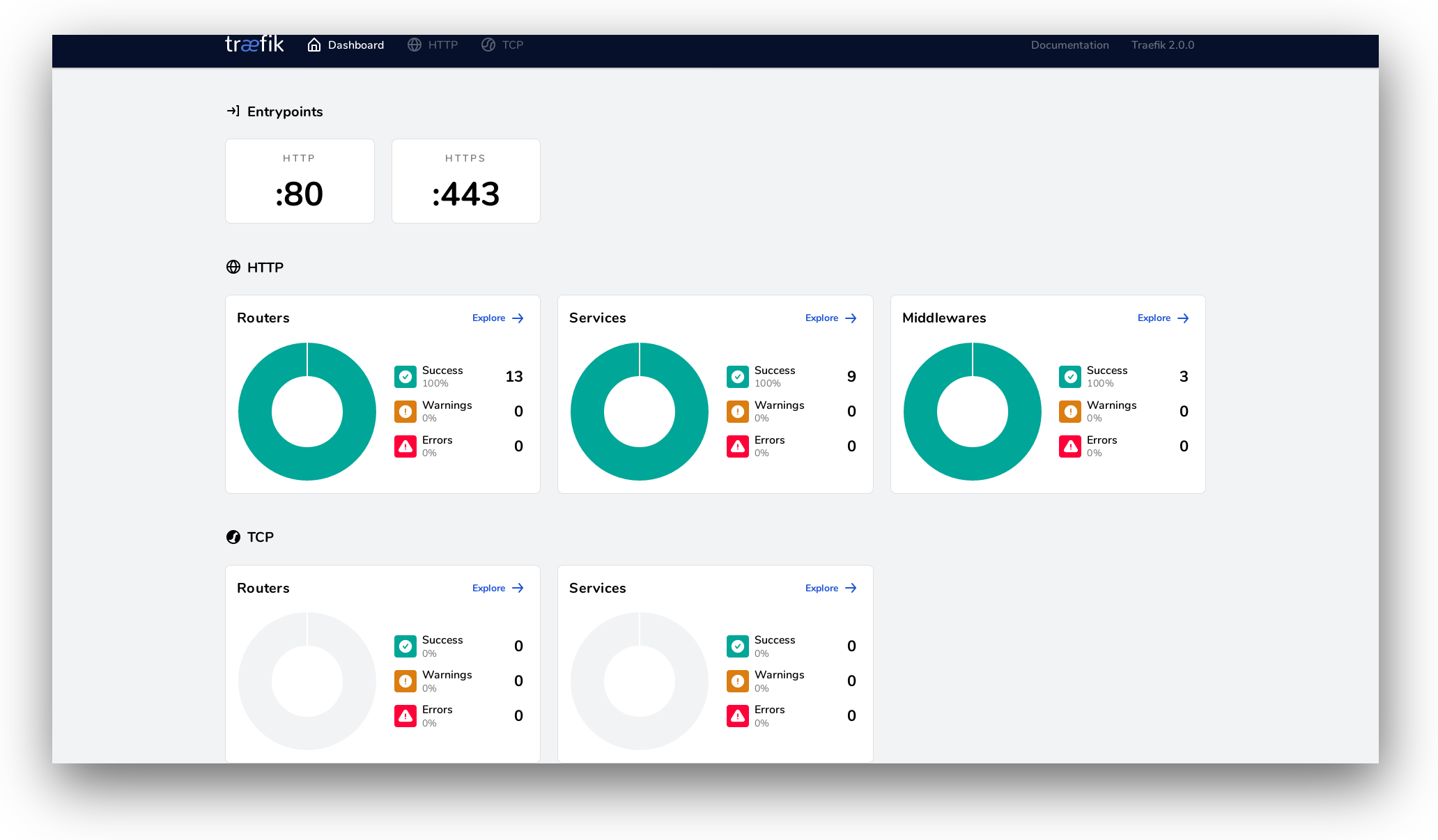
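The two redirects in the certificates and legacy files can be expressed with Traefik's `redirectScheme` and `redirectRegex` middlewares. The decision they make for each request can be sketched in Python as below. This only illustrates the behaviour and is not Traefik configuration. The regular expression is an assumption about how such a .ga to .com rule might look, and it ignores explicit ports.

```python
import re
from typing import Optional
from urllib.parse import urlsplit

# Assumed pattern: any host ending in ".ga", optionally followed by a path.
LEGACY_PATTERN = re.compile(r"^https?://([^/]+)\.ga(/.*)?$")


def redirect_target(url: str) -> Optional[str]:
    """Return the URL a request should be redirected to, or None to serve it."""
    legacy = LEGACY_PATTERN.match(url)
    if legacy:
        # Legacy: same host and path, but .com instead of .ga (and HTTPS).
        host, path = legacy.group(1), legacy.group(2) or "/"
        return f"https://{host}.com{path}"
    parts = urlsplit(url)
    if parts.scheme == "http":
        # Certificates: move plain HTTP traffic to the secure entry point.
        return parts._replace(scheme="https").geturl()
    return None  # already HTTPS on the new domain: no redirect


if __name__ == "__main__":
    for url in ("http://git.devdroplets.ga/explore",
                "http://git.devdroplets.com/explore",
                "https://git.devdroplets.com/explore"):
        print(url, "->", redirect_target(url))
```

The legacy rule is checked first, so a plain-HTTP .ga request goes straight to its final HTTPS .com address in one hop instead of two.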

So if you put everything together, you get a beautiful structure that the normal customer (hopefully) never sees. We, however, have the pleasure of knowing that it works without touching any configuration once it has been set up correctly. And if for any reason you don't believe any of this and think this whole document is a forgery, then first of all: you might have some trust issues, man. And second of all, here is the beautiful dashboard provided by Traefik:

Final word

Personally, I'm quite happy with this integration style. It makes deploying web services as easy as dropping in a few files and letting the system do the rest. This lets me focus on building a quality product rather than worrying about deploying my code.

So, to go back to the main question: "It takes a single server to screw in a lightbulb, but a butt load of time to set it all up."