Yet another guide to Neural Networks

This is a theoretical introduction to Neural Networks. It is meant to help you build intuition and work through common issues, not to get you going with any framework in particular.

[Disclaimer of problem classification]

The fundamental theory

Neural networks are but transformations

Recommended: You can create and test your own network online.

One thing you should know before we start is that a neural network is nothing more than a bunch of transformations, in the same way that f(x) = x * 2 or g(x) = sin(x[0]) are transformations. But instead of using fixed functions, we leave certain variables to be adjusted in a process we call 'training'.

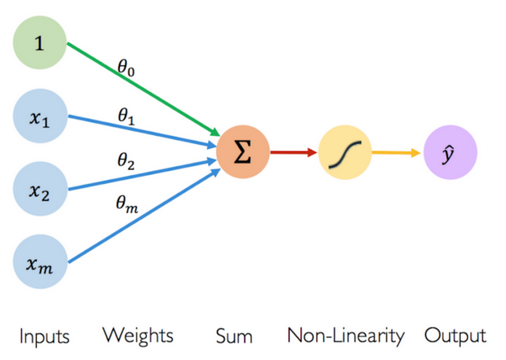

A 'trainable' transformations are generally done using Neurons which is are a weighted sum, where the weights are trainable parameters, going into a non-linear function:
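To make the neuron a bit more concrete, here is a minimal sketch in plain Python. The choice of `tanh` as the non-linear function and the example numbers are assumptions for illustration only; in a real network the weights would come out of training.

```python
import math

def neuron(inputs, weights):
    """Weighted sum of the inputs, passed through a non-linear function."""
    weighted_sum = sum(x * w for x, w in zip(inputs, weights))
    return math.tanh(weighted_sum)  # tanh is just one possible non-linearity

# Hypothetical inputs and weights, picked so the weighted sum is exactly 0.
output = neuron([1.0, 2.0, 3.0], [0.5, -0.25, 0.0])
print(output)  # 0.0
```

Changing any of the weights changes the output for the same input, and that is all 'trainable' means here.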

At the base of all neural networks stands the Universal Approximation Theorem. This theorem states that any continuous function (on a closed, bounded input range) can be approximated to any desired precision using a single hidden Fully Connected Layer (aka Linear or Dense layer) with a non-linear function and a finite number of neurons. A fully connected layer means that all neurons of layer i are connected to all neurons of layer i+1.

This is not very efficient though, as finite doesn't mean 'few', and there are a lot of other caveats with using a single layer.

A flow of transformations

One popular framework has a very appropriate name: TensorFlow. A tensor is an n-dimensional array, and the flow is the transformation of those tensors as they go through the model. (The exact definition of a tensor is different, but that is outside the scope of this article.)

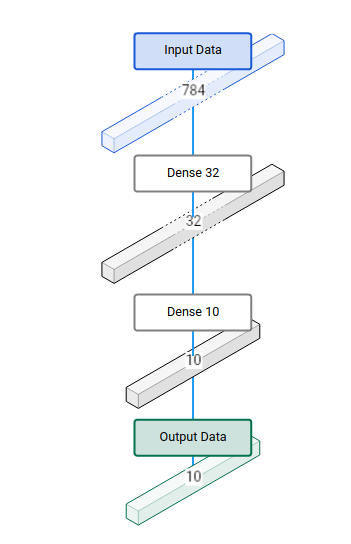

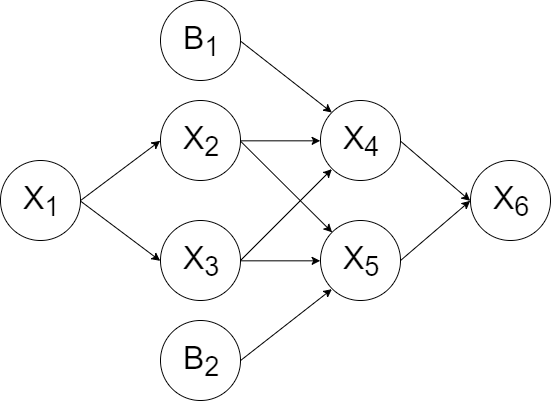

This 'flow' might be visualized using a diagram like this:

This is how you could, and probably should, visualize the transformations in your own models going forward. It will help keep your sanity.

If a tensor is of 'higher rank' (e.g. 2D, 3D, etc.), its shape will be a list with one size per dimension. For example, a tensor of shape (2, 3, 5) might be a float[2][3][5] when programming.
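As a small sketch of what 'shape' means, the snippet below builds such a tensor out of nested Python lists and reads its shape back. The helper `shape` is a hypothetical name written for this example (frameworks expose this for you), and it assumes the nested lists are rectangular and non-empty.

```python
def shape(tensor):
    """Return the shape of a rectangular, non-empty nested list as a tuple."""
    dims = []
    while isinstance(tensor, list):
        dims.append(len(tensor))
        tensor = tensor[0]  # descend one dimension
    return tuple(dims)

# A tensor of shape (2, 3, 5): 2 blocks, each holding 3 rows of 5 floats.
tensor = [[[0.0 for _ in range(5)] for _ in range(3)] for _ in range(2)]
print(shape(tensor))       # (2, 3, 5)
print(len(shape(tensor)))  # 3, the rank of the tensor
```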

All layer transformations between the input and the output are commonly called hidden layers. The values these layers produce are generally not 'interpretable'. In this sense neural networks are a black box.

How can it be trained?

For a Neural Network to be trainable, every transformation needs to be differentiable. This is so we can perform gradient descent: basically looking at the result and seeing which way to nudge each parameter to get it lower.

This is why all neural networks end with a Loss function, which the program can try to make as low as possible. The loss function might be something like:

$$loss(x, y) = |y - x|$$

Where x is the result from your model, and y is the target result (aka your data). This loss function is just the absolute difference between the two values, meaning you want x and y to be close together.

Note that most functions are differentiable by nature; see here for some non-differentiable functions. Non-differentiable functions that are continuous can still be used, but might require more 'effort' to implement.

While the functions need to be differentiable, you are free to introduce constants, or even 'destruct' variables (e.g. 2 * x, or x = max(x)).

Using this, the framework can differentiate the entire model and minimize loss(x, y).
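To give a feel for what the framework does under the hood, here is a hand-written sketch of gradient descent on the loss above. The 'model' is a single made-up multiplication `x = w * inp`, and the learning rate, step count and data point are arbitrary assumptions; real frameworks derive the gradient automatically instead of having it written out by hand.

```python
def sign(v):
    return (v > 0) - (v < 0)

def loss(x, y):
    return abs(y - x)

def train(inp, y, w=0.0, learning_rate=0.1, steps=50):
    """Fit the single parameter w of the toy model x = w * inp."""
    for _ in range(steps):
        x = w * inp                   # forward pass: the model's result
        # Chain rule: d|y - x|/dx = -sign(y - x), and dx/dw = inp.
        grad_w = -sign(y - x) * inp
        w -= learning_rate * grad_w   # step against the gradient
    return w

# Hypothetical data point: input 2.0 with target 6.0, so the ideal w is 3.0.
w = train(inp=2.0, y=6.0)
print(w, loss(w * 2.0, 6.0))
```

Because the step size is fixed, w ends up hopping around 3.0 rather than landing on it exactly; this is one reason practical setups tend to use smoother loss functions and smaller or decaying learning rates.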

In this example we use the simplest loss function possible, but there are (a lot better) standard loss functions for most tasks.

Visualizing the training

Recommended: See this kind of process in action at the TensorFlow Playground.

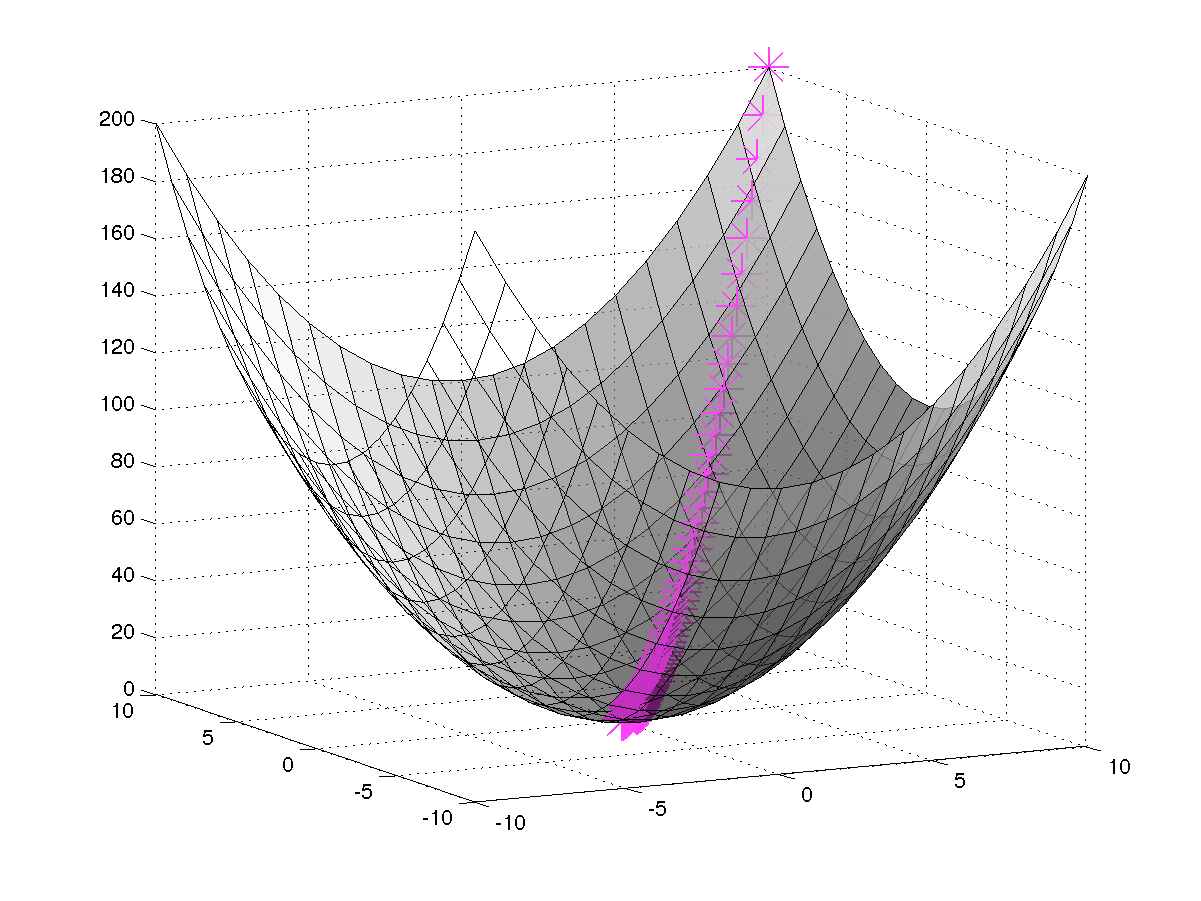

A loss function, in the simplest sense may visualized as a simple parabolic function, where the horizontal axes are parameters (2 parameters being trained in this example), and the vertical axis is the resulting loss:

However when working with real data, and a lot more parameters this will be a nigh impossible to visualize. And even when only viewing 2 parameters of a more complex function, the graph will be looking a lot more chaotic.

'Fit' vs Fit

This section applies to all of machine learning (not just neural networks).

See the Venn diagram.

Before we continue learning about how to improve upon these basic principles, I want to take the time to discuss 'fitting'. This is an alternative way to say 'training', but it more accurately refers to the process of having your model fit your data.

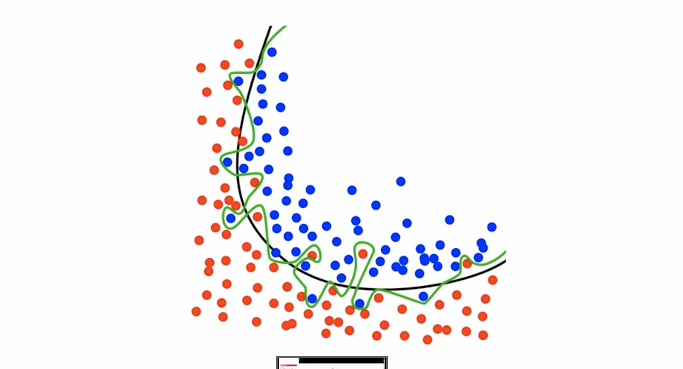

In fitting it is important to remember what you want to achieve with the model. You might fit the data exactly, but have lost all 'intelligence'.

When your model fits the training data but not the actual goal, we call this overfitting. What happens is that your model is learning which training input goes with which answer, and not how to properly execute the task you meant to give it.

A common cause of overfitting is having too many parameters for the amount of training data. This can often be fixed by reducing the number of layers/neurons.

And this is one of the caveats of the Universal Approximation Theorem: a network can approximate any function, and so it can basically 'store' the data:answer pairs if you give it enough neurons.

When creating your own neural network it's useful to verify that the 'parameter count' is appropriate.
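As a rough sketch of how you might do that check by hand for a fully connected network: the function name `count_parameters` and the layer sizes are made up for this example, and the optional bias term is the one covered in the 'Added bias' section further down.

```python
def count_parameters(layer_sizes, use_bias=True):
    """Count the trainable parameters of a fully connected network.

    layer_sizes lists the number of neurons per layer, input layer first.
    """
    total = 0
    for n_in, n_out in zip(layer_sizes, layer_sizes[1:]):
        total += n_in * n_out   # one weight per connection
        if use_bias:
            total += n_out      # one bias per neuron
    return total

# Hypothetical network: 4 inputs, one hidden layer of 8 neurons, 3 outputs.
print(count_parameters([4, 8, 3]))  # 4*8 + 8 + 8*3 + 3 = 67
```

Comparing this number with the number of training examples you have is a quick, if crude, sanity check.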

You can detect overfitting by keeping some amount of test or validation data which isn't included in the training process; if the error on that held-out data is a lot higher than on the training data, the model has most likely overfit.
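The sketch below shows that check on an exaggerated toy case. Everything in it is an assumption made for illustration: the task (y = 2x plus noise), the 30/10 split, and the two stand-in 'models', one of which only memorizes the training pairs.

```python
import random

def mean_abs_error(model, pairs):
    return sum(abs(model(x) - y) for x, y in pairs) / len(pairs)

random.seed(0)
# Hypothetical task: y = 2x plus a little noise.
data = [(x, 2 * x + random.uniform(-0.5, 0.5)) for x in range(40)]
random.shuffle(data)
train_set, validation_set = data[:30], data[30:]

# A 'model' that only memorizes the training pairs (extreme overfitting).
memory = dict(train_set)
def memorizer(x):
    return memory.get(x, 0.0)

# A model that captures the underlying rule instead.
def simple_rule(x):
    return 2 * x

for name, model in [("memorizer", memorizer), ("simple rule", simple_rule)]:
    print(name, mean_abs_error(model, train_set),
          mean_abs_error(model, validation_set))
```

The memorizer scores a perfect zero on the training set and falls apart on the validation set, while the simple rule has a small, similar error on both. That gap between the two numbers is the thing to watch for.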

Combining multiple layers

One of the fundamental concepts you'll find everywhere in the field is the Activation Function: a non-linear function which all values of a layer get passed through.

The reason you need an activation function is, to put it simply, to 'activate' your result: it makes each layer non-linear. If you didn't have one, your entire model could be simplified to a single layer. You can see the simplification as equivalent to 8 * 4 * 2 == 64; a chain of linear transformations can always be multiplied out into one linear transformation, and that's why we want a non-linear function in between.
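Here is a small sketch of that collapse, using plain lists as matrices. The weight values are arbitrary, and `matmul` and `apply` are helper names invented for this example.

```python
def matmul(a, b):
    """Multiply two matrices given as lists of rows."""
    return [[sum(a[i][k] * b[k][j] for k in range(len(b)))
             for j in range(len(b[0]))]
            for i in range(len(a))]

def apply(x, w):
    """Apply a linear layer: row vector x times weight matrix w."""
    return matmul([x], w)[0]

w1 = [[1, 2], [3, 4], [5, 6]]   # layer 1: 3 inputs -> 2 outputs
w2 = [[1, 0], [2, 1]]           # layer 2: 2 inputs -> 2 outputs
x = [1, 1, 1]

two_layers = apply(apply(x, w1), w2)
one_layer = apply(x, matmul(w1, w2))  # both layers merged into one matrix
print(two_layers, one_layer)  # [33, 12] [33, 12]
```

Two layers without an activation in between give exactly the same result as one layer with the merged weights, so the second layer added nothing.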

So without the activation function:

1) A lot of computation is wasted

2) It will be extremely difficult to solve a non-trivial problem

3) Your model will be harder to train

Choosing the right activation function (over the default) can also improve your model's accuracy, although this should be a fine-tuning step, as the 'default' activation function is often a good one.

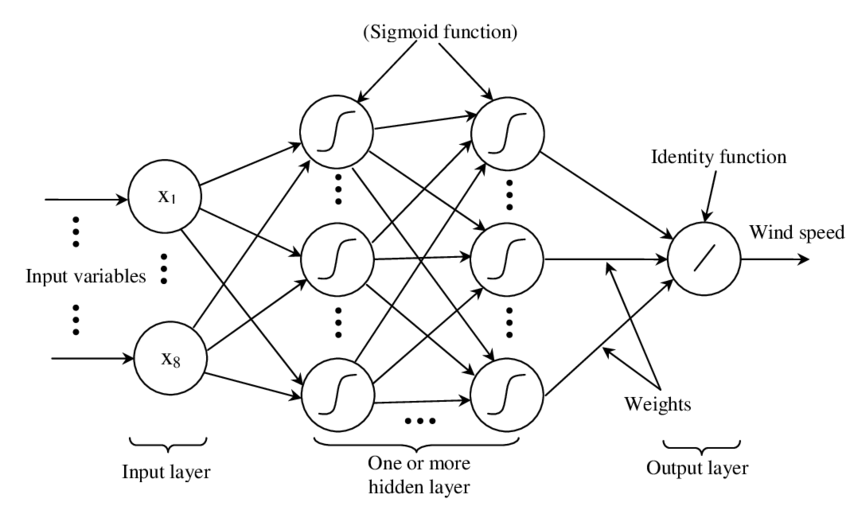

When we put together multiple layers of neurons we call this a Multilayer Perceptron or MLP. A simple fully connected MLP might look like this:

Added bias

If you've read previous sections you should be familiar with the fully connected layer. However there is still one thing which exists in pretty much all non-toy implementations of the layer: bias.

While its usefulness in modern neural networks is debated, it is still commonly added. The idea behind it is that the output of the model can be a bit more flexible if there is some fixed value added to each neuron.

Bias is a single number per neuron which is added on top of the weighted sum of the inputs. This value isn't multiplied by any input, but is itself a trainable parameter.
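A minimal sketch of a fully connected layer with bias could look like the following. The function name `dense`, the layout of the weights (one list per output neuron) and the numbers are all assumptions for this example, and the activation function is left out to keep the bias visible.

```python
def dense(inputs, weights, biases):
    """Fully connected layer without activation.

    weights[j] holds the weights of output neuron j; biases[j] is its bias.
    """
    return [sum(x * w for x, w in zip(inputs, neuron_weights)) + b
            for neuron_weights, b in zip(weights, biases)]

weights = [[1.0, 2.0], [3.0, 4.0]]
biases = [0.5, -1.0]

# With all-zero inputs only the bias determines the output.
print(dense([0.0, 0.0], weights, biases))  # [0.5, -1.0]
print(dense([1.0, 1.0], weights, biases))  # [3.5, 6.0]
```

Without the bias, an all-zero input could only ever produce an all-zero weighted sum, whatever the weights are.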

Practical

End-to-End training

One thing you often find in papers is models that are trained End-to-End. This essentially means that a single model performs the entire task, from raw input to final output, and is trained as a whole. This is very beneficial as it simplifies the theory behind the model.

This will often make the training code very simple:

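```python
# Framework-agnostic pseudocode: model, loss, x and target_y are placeholders
y = model.forward(x)                 # run the input through the whole model
model.backward(loss(target_y, y))    # propagate the loss back through it

# Or in higher abstraction frameworks
model.fit(data, labels)
```

Whenever possible you want to design your neural network to be end-to-end trainable.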

Measuring your model

Evaluating your model can be very important when using neural networks in the real world. But there are some things to consider when doing so.

The 'default' way to evaluate a classification model is to test its accuracy over a test set. Accuracy is the fraction of answers the model gets right out of all the classification problems it was given. For other tasks the metric might be different.
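Accuracy is simple enough to compute by hand, as in this sketch. The labels and predictions are made up; most frameworks ship a ready-made version of this metric.

```python
def accuracy(predictions, labels):
    """Fraction of predictions that match the true labels."""
    correct = sum(p == t for p, t in zip(predictions, labels))
    return correct / len(labels)

# Hypothetical test set with 4 examples, 3 of which are predicted correctly.
predictions = ["cat", "dog", "dog", "cat"]
labels = ["cat", "dog", "cat", "cat"]
print(accuracy(predictions, labels))  # 0.75
```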

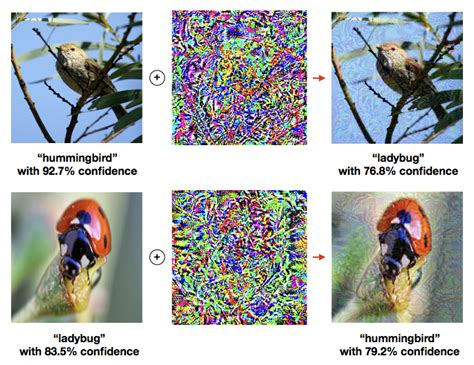

However neural networks aren't like regular programs or actual intelligence, so it will fail in unexpected ways. For example using Adveserial networks you can trick a model to into changing it's identification:

And so when you are using a neural network to do some task you should consider it a black box which probably gives you an OK answer.

There are ways to help resolve this [1, 2], but this is still on-going research.

For now a decent way to validate your model is to create some acceptance criteria and check those on data that isn't in the training set.
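What such criteria look like depends entirely on your task. As one possible sketch, the check below requires a minimum overall accuracy plus a minimum share of correct answers for every individual class; the function name, the thresholds and the example data are all hypothetical.

```python
def check_acceptance(predictions, labels, min_accuracy=0.9, min_class_recall=0.8):
    """Return the list of failed criteria (an empty list means accepted)."""
    failures = []
    correct = sum(p == t for p, t in zip(predictions, labels))
    if correct / len(labels) < min_accuracy:
        failures.append("overall accuracy")
    for cls in sorted(set(labels)):
        total = sum(t == cls for t in labels)
        hits = sum(p == t == cls for p, t in zip(predictions, labels))
        if hits / total < min_class_recall:
            failures.append(f"recall for {cls}")
    return failures

# Hypothetical held-out data: the model answers "cat" for everything.
labels = ["cat"] * 8 + ["dog"] * 2
predictions = ["cat"] * 10
print(check_acceptance(predictions, labels, min_accuracy=0.75))
# ['recall for dog']
```

Here the model reaches 80% accuracy and still never recognizes a single dog, which is exactly the kind of failure a single overall number can hide.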

And if you are willing to go the extra mile, you can set up your own adversarial network, and retrain your model based on the newly generated adversarial data.

The new

[Opinions, increasing scale, efficient rather than accuracy, relevance]

[Normalization; Batch, Group, Instance, Layer]

[Encoding; one-hot; creativity; e.g. Mel-scale, *2Vec]

[Embedding; transfer-learning; clustering/triplet/pair]

[Convolutions; dilation; mobility; locality]

[Dynamic Networks; Deformable conv; define-by-run]

[residual; dense-interconnect; vanishing gradient; only with many layers]